Task Queue Concepts

The ClearConnect platform uses a bespoke task queue that makes efficient use of available threads. Real-time data systems require the tasks within each context to be executed in order; many system designs end up using a single-threaded executor per context to achieve this, leading to a proliferation of threads.

The ClearConnect platform addresses this by having a pool of threads that execute tasks from a specialised task queue that manages two genres of tasks:

- Sequential tasks; these are tasks that are maintained in sequence with respect to their context.

- Coalescing tasks; these are tasks that can replace earlier, un-executed tasks, in the same context.

Both task genres declare the context they are bound to. Together, they ensure in-order execution of tasks and skipping of old, out-of-date tasks using a single pool of threads. Sizing the thread pool as a function of the available cores yields an efficient task processing engine that does not suffer (too much) from thread context switching.

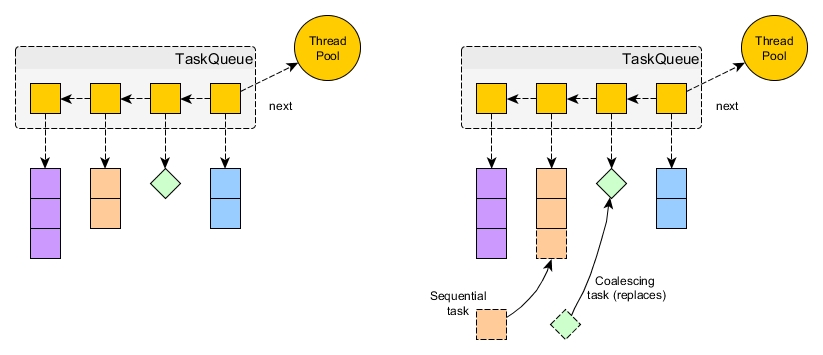

The diagram below illustrates the composition of the task queue. The queue can be thought of as a linked list of task contexts. Each task context has its own internal queue of tasks that are bound to the same context. The diagram shows four task contexts in the queue: three are sequential and one is coalescing (the diamond).

When a sequential task is added to the task queue, it is added to the end of the internal queue for its matching task context. This ensures in-order execution of all the tasks in that context. In contrast, a coalescing task simply overwrites the existing, un-executed entry in its task context.
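The difference between the two kinds of task context can be sketched in a few lines of Java. This is only an illustration under assumptions: the class and method names (`SequentialContext`, `CoalescingContext`, `add`, `poll`) are hypothetical and are not taken from the ClearConnect API, and thread safety is left out for brevity.

```java
import java.util.ArrayDeque;
import java.util.Deque;

/** Hypothetical sketch of the two task-context kinds; not the ClearConnect API. */
final class TaskContextSketch {

    /** Sequential context: tasks queue up and are handed out in arrival order. */
    static final class SequentialContext {
        private final Deque<Runnable> tasks = new ArrayDeque<>();

        void add(Runnable task) { tasks.addLast(task); }

        Runnable poll() { return tasks.pollFirst(); }
    }

    /** Coalescing context: holds at most one pending task; a newer task replaces it. */
    static final class CoalescingContext {
        private Runnable latest;

        void add(Runnable task) { latest = task; }

        Runnable poll() {
            Runnable task = latest;
            latest = null;
            return task;
        }
    }

    /** Adds three tasks to each kind of context, drains both, and reports what ran. */
    static String demo() {
        StringBuilder out = new StringBuilder();
        SequentialContext sequential = new SequentialContext();
        CoalescingContext coalescing = new CoalescingContext();
        for (int i = 1; i <= 3; i++) {
            final int n = i;
            sequential.add(() -> out.append("s").append(n).append(' '));
            coalescing.add(() -> out.append("c").append(n).append(' '));
        }
        Runnable task;
        while ((task = sequential.poll()) != null) {
            task.run();
        }
        while ((task = coalescing.poll()) != null) {
            task.run();
        }
        return out.toString().trim();
    }

    public static void main(String[] args) {
        System.out.println(demo()); // prints: s1 s2 s3 c3
    }
}
```

All three sequential tasks run in the order they were added, while only the most recent coalescing task survives to be executed.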

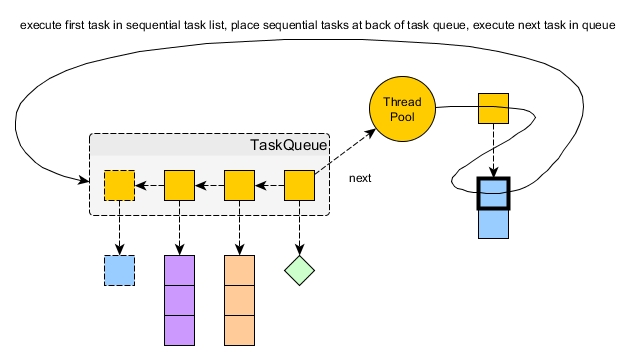

On execution of a sequential task context, the first task in the task context’s internal queue is removed and executed by a thread in the pool. The entire task context is then re-added to the back of the task queue, and the next task context is taken from the queue. This ensures that all task contexts are given an equal opportunity for execution, so a task context with a large internal queue will not starve other task contexts.
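The round-robin behaviour described above can be simulated with a single thread. The sketch below is illustrative only: `Context`, `drain` and `demo` are hypothetical names, and it assumes that a context with no remaining tasks is simply not re-added to the queue, which the text does not state explicitly.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;

/** Hypothetical single-threaded simulation of round-robin context execution. */
final class RoundRobinSketch {

    /** A task context with its own internal queue of (named) tasks. */
    static final class Context {
        final String name;
        final Deque<String> tasks = new ArrayDeque<>();

        Context(String name, String... taskNames) {
            this.name = name;
            for (String taskName : taskNames) {
                tasks.addLast(taskName);
            }
        }
    }

    /** Takes one task per context per turn and returns the resulting execution order. */
    static List<String> drain(Deque<Context> queue) {
        List<String> order = new ArrayList<>();
        Context context;
        while ((context = queue.pollFirst()) != null) {
            String task = context.tasks.pollFirst();
            if (task != null) {
                order.add(context.name + ":" + task); // "execute" the task
            }
            // Assumption: only contexts with pending tasks go to the back of the queue.
            if (!context.tasks.isEmpty()) {
                queue.addLast(context);
            }
        }
        return order;
    }

    static List<String> demo() {
        Deque<Context> queue = new ArrayDeque<>();
        queue.addLast(new Context("A", "1", "2", "3"));
        queue.addLast(new Context("B", "1"));
        queue.addLast(new Context("C", "1", "2"));
        return drain(queue);
    }

    public static void main(String[] args) {
        System.out.println(demo()); // prints: [A:1, B:1, C:1, A:2, C:2, A:3]
    }
}
```

Context A has the longest internal queue, yet B and C each get a turn before A runs its second task. In a multi-threaded version, taking the context off the queue while its task runs would also prevent two threads from executing tasks of the same context concurrently.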

As the number of threads in the pool increases, so does the throughput of task context execution; the maximum number of task contexts that can execute in parallel equals the number of threads in the pool.

The threads in the thread pool associated with the task queue are known as the "core threads" and are called fission-coreX, where X is the thread number. There is only one thread pool and task queue per virtual machine (VM). This is a key concept as it means that all proxy and service instances running in the same VM share the processing throughput of the core threads.
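As a rough illustration of a core-sized pool with named threads, the sketch below uses the standard `java.util.concurrent` classes. It is not the platform's implementation: the class `CoreThreadsSketch` and its methods are hypothetical, the pool size is assumed to equal the number of available cores (the text only says it is a function of them), and numbering the threads from 0 is a guess.

```java
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.ThreadFactory;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

/** Hypothetical sketch of a core-sized pool with "fission-coreX" thread names. */
final class CoreThreadsSketch {

    /** Names threads fission-core0, fission-core1, ... (starting at 0 is an assumption). */
    static ThreadFactory coreThreadFactory() {
        AtomicInteger counter = new AtomicInteger();
        return runnable -> {
            Thread thread = new Thread(runnable, "fission-core" + counter.getAndIncrement());
            thread.setDaemon(true);
            return thread;
        };
    }

    /** Assumption: one thread per available core. */
    static ExecutorService newCorePool() {
        int size = Math.max(1, Runtime.getRuntime().availableProcessors());
        return Executors.newFixedThreadPool(size, coreThreadFactory());
    }

    /** Runs one task on a fresh pool and returns the name of the thread that ran it. */
    static String firstThreadName() {
        ExecutorService pool = newCorePool();
        try {
            Callable<String> nameTask = () -> Thread.currentThread().getName();
            return pool.submit(nameTask).get(5, TimeUnit.SECONDS);
        } catch (Exception e) {
            throw new IllegalStateException("task did not complete", e);
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        System.out.println(firstThreadName()); // prints: fission-core0
    }
}
```

Because there is a single pool per VM, every proxy and service in that VM would submit work to the same set of named threads, which makes them easy to identify in a thread dump.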